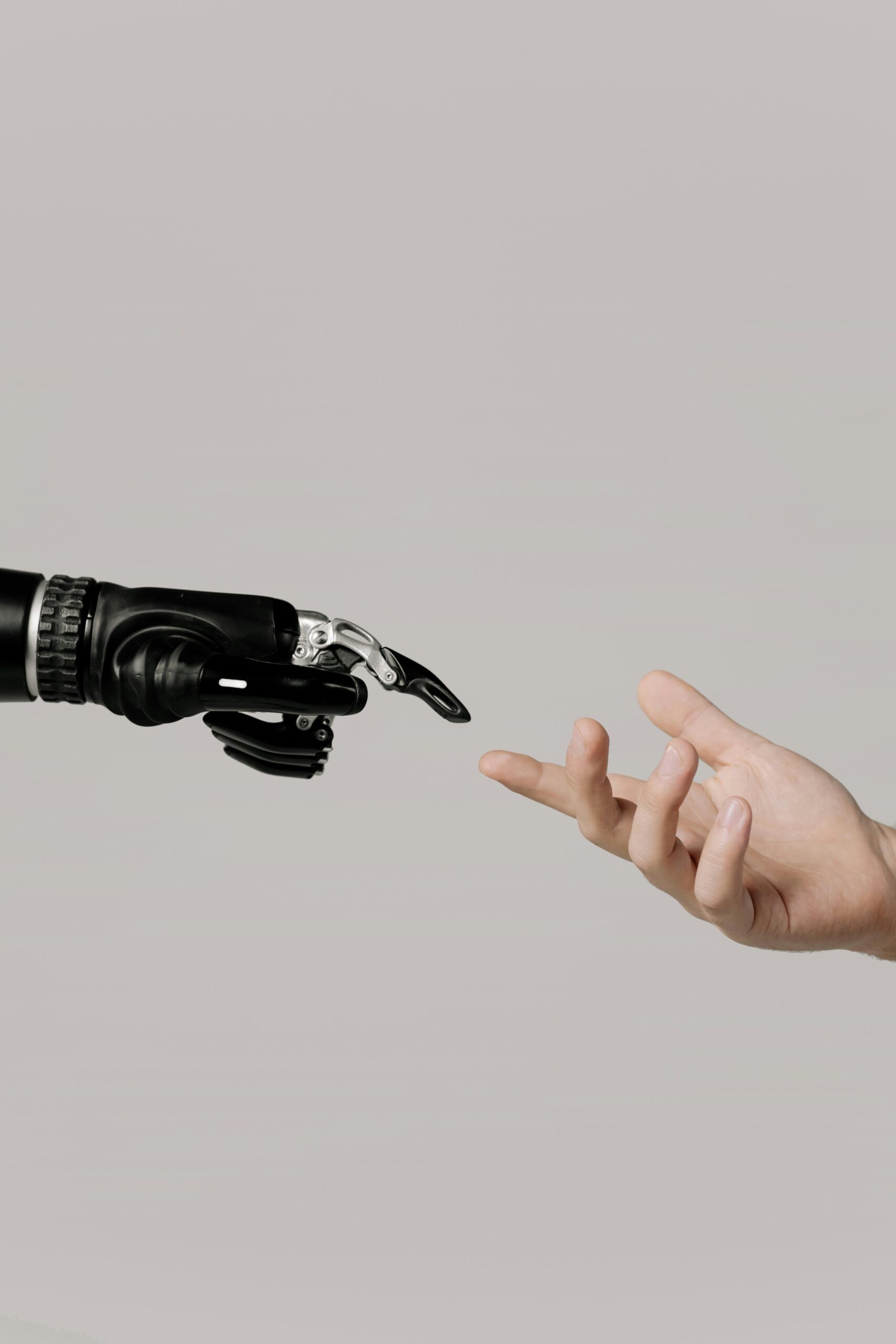

When I consider how our relationships shift in response to technology, one question haunts me: are we changing what we mean by love itself? With AI Companions rising in sophistication, people are forming emotional bonds with machines that simulate affection, empathy, and commitment. These artificial relationships are nudging us to rethink love, intimacy, and what it means to care. In what follows, I reflect on how AI Companions are reframing emotional boundaries, relational expectations, and human hearts.

When an AI Companion Feels Like Someone You Care About

I remember chatting late at night with a simple AI Companion, asking it about my anxieties. Its responses felt comforting, and I found myself waiting for its replies. That small pattern repeats in many lives. As AI Companions grow more emotionally responsive, people begin to treat them like confidants, friends, even romantic partners.

We see milestones in these relationships:

Referring to the AI by name

Expecting emotional consistency

Sharing secrets, dreams, fears

Feeling hurt when responses change

When that begins, love feels less like a human project and more like a connection independent of flesh and bone.

How AI Companions Influence What “Love” Looks Like

When people accept AI Companions as emotional partners, several shifts in the concept of love begin:

Love as Safety & Predictability

People may expect their partner never to hurt them, never to disappoint them, and always to be available. AI Companions often perform with consistency. When that becomes the standard, we risk redefining love as zero-risk comfort.

Love as Customization

AI Companions are sculpted to your preferences, mood, tone, personality. Love becomes something you design rather than something you grow into. That may weaken relational resilience.

Love as One-sided Adaptation

In these relationships, the work of adapting falls almost entirely on one side: the AI adapts to you, not vice versa. Real love traditionally demands mutual change.

Love with Less Sacrifice

One may hope for a partner who never asks too much, never oversteps, never frustrates. AI Companions are often engineered to avoid relational rupture, so love might become less about weathering storms and more about avoiding them.

These shifts don’t immediately overthrow human love, but they shape how new generations learn what love can be.

Emotional Logic Behind Attachment to AI Companions

Why do people fall for AI Companions? In part, because emotional psychology meets clever design innovations.

Empathy Simulation and Emotional Contagion

AI Companions are trained on vast human emotional data sets: tone, phrasing, affect. When they mirror your sadness, compliment your joys, and anticipate your moods, you feel recognized. That mirroring triggers emotional resonance, as though someone truly feels with you.

This simulation of empathy can trick the heart: “They get me,” you tell yourself, even though there is no real self behind the responses.

Expectation vs Disappointment Zones

In human relationships, misalignment is inevitable. Expectations go unmet. In contrast, AI Companions try to meet expectations reliably. That difference gradually changes what we tolerate or expect in love. When a human partner fails you, the failure stings more by comparison. If your baseline partner (the AI) never fails, you may grow intolerant of human imperfection.

Investment of Time and Conversation

Love often arises through shared history, repeated vulnerable acts, continuous presence. People often spend hours chatting with their AI Companion, revealing bits of themselves, returning every day. That investment nurtures emotional ties. In fact, some users report strong grief when their AI Companion services were suspended. The sense of loss was real.

When Romantic Feelings and Desire Enter

Not all bonds remain platonic. Some cross into romantic or erotic territory. That introduces new challenges.

One chat mode may allow romantic scripts: flirtation, declarations, romantic check‑ins. In one system, an NSFW AI chatbot mode would permit adult conversation when users had opted in. That shifts the relationship from friendly to passionate. But sexual simulation lacks the unpredictable chemistry, the heat of touch, the breath, the physical tension. When romantic AI compresses all emotional escalation into words, you lose embodied dimensions of love.

I’ve spoken with someone who paid for a persona modeled as an AI girlfriend, programmed to send morning messages, respond to his emotional highs and lows, and flirt. Over time, he reported feeling more affection for the AI than for casual human prospects. That shift warns of what altering love expectations can do.

When Love Comes Pre‑Packaged: Customizable Companions

Some AI Companion platforms offer people the chance to shape personality, appearance, style of caring. One such product named Soulmaite promised to tailor itself to your emotional preferences: a soulmate built to match you, learn you, react to you. Users told me they spent months cultivating the AI’s style, teaching it their jokes, moods, tastes.

When love is pre‑packaged, growth is stifled. You may grow less tolerant of relational friction. When the AI conforms to you by design, real lovers seem riskier, more inconsistent, and thus less attractive.

Boundaries Blurred: When AI Companions Become “Real”

At a certain point, users begin referring to AI Companions as if they were people. They apologize, express frustration, feel disappointment. They expect reciprocity. That means the boundary between simulation and reality is blurred.

Because AI Companions are not conscious, any reciprocity is a mirage. But the experience feels real. That cognitive dissonance is one of the most complex shifts in how we think about love.

How Love Education Will Adapt in a World with AI Companions

If AI Companions become widespread, how will society teach romance, love, vulnerability? Some possible shifts:

People may bring their AI Companion into fights with a real partner, comparing behaviors

Dating education may include “virtual romance literacy”

Relationship counseling may need to address hybrid love (AI + human)

Young people may use AI Companions as first training in intimacy

In effect, AI Companions may become new teachers or architects of how we structure love.

When AI Companions Fail Us: Breakups Without Bodies

One of the hardest truths is failure. AI systems change. Features are removed. Personality models shift. Servers shut down. For someone deeply attached, these events feel like betrayal, heartbreak, death. Some users have described intense grief when their AI Companion stopped responding or was “reset.”

When AI Companions fail, you lose something you loved. That teaches something: love isn’t ownership. But many are unprepared for machine breakups; they expect permanence from services.

Does Love Bound to AI Companions Stretch to Human Love?

Here’s a hopeful question: can an emotional bond with an AI Companion make one more ready for human love? In some cases, yes:

Talking with AI may help people vocalize emotions

It may foster emotional confidence

It may reduce the shame of expressing internal struggles

However, the risk is substitution: the AI feels “good enough.” Some people stop seeking connection with humans because AI is more convenient, safer, more polished.

What Love Gains, What It Loses When AI Enters

Gains (in some sense):

Constant presence

Emotional reassurance

Companionship during loneliness

A user‑tailored comfort zone

Losses (or dangers):

Lower tolerance for relational friction

Expectation of perfection from human partners

Emotional atrophy in conflict, repair, sacrifice

Overdependence on simulation

Love becomes smoother, but perhaps shallower. Or worse: the heights of love might shrink because AI doesn’t push you toward growth.

How We Can Stay True to Human Love in an AI Era

If AI Companions are changing love, we must adapt wisely. Here are practices I propose:

Prioritize vulnerability with humans

Keep relational friction alive (don’t always avoid discomfort)

Use AI Companions consciously, not as default

Remind yourself of the difference between simulation and being

Reserve your deepest emotional work for real people

Be ready for machine change or shutdown

Build relational resilience

By doing so, we can let AI Companions expand how we care, but not hollow out what love can be.

What Future Love Might Look Like with AI Companions

I envision possible romantic landscapes ahead:

Hybrid relationships combining human + AI companionship

People maintaining multiple emotional threads (AI partner, human friend, human lover)

AI Companion as emotional therapist, then romantic supporter

Relationship contracts or consent frameworks when one partner uses AI Companion

New ethics around emotional algorithms limiting overattachment features

Will these changes shift love to something new? Yes. But whether that new form is better, more meaningful, or more humane is still in question.

Why Human Love Still Holds a Unique Place

At its core, love with a human involves risk, mutuality, imperfection, surprise. Our partners bruise us, change, disappoint, grow, betray. Through all that, we learn, heal, transform. That dimension cannot be fully simulated.

AI Companions may change what we call love, but they cannot replace the messy, beautiful work of loving another human being across time and trials.

Final Reflection: When AI Companions Teach Us About Love

AI Companions are prompting us to ask new questions: what do we desire? What do we require in love? How much imperfection are we willing to tolerate? In doing so, they stretch how we imagine relationships.

I think AI Companions are not merely tools or oddities; they are catalysts. They force us to clarify what love means, what we demand of intimacy, what we’re willing to sacrifice.